This has been a busy week of daytime activities, with the physics colloquium at CSULB, "gruncle" activities with great nephew Jackson in San Diego, an AIAA meeting on climate science and the Quantum Summit at Caltech.

The Quantum Summit included a half dozen presentations by quantum computing experts describing the progress being made toward the quantum computer dream. I probably doubled or quadrupled what I knew about quantum computing, and that was only after taking lots of notes and pretending that I understood what was being described. I can only remember and provide this high level summary of what I heard:

First, quantum computing does not involve new physics, but does require some solutions to tough fabrication and noise and environmental interference issues. It seems you need just the right amount of entanglement between the quantum circuits but no environmental interference until you make a measurement to get the answer.

Second, quantum computers will not be easy to program and will require special software running on classical computers to help control them and get the answer. The big boys at Intel, HP, Google, Microsoft and D-Wave, dozens of university labs and more are busy trying to learn how to do it.

Third, they probably will not be very small, in that most of the technologies used to build them require superconducting materials. The computer circuits, based on microwaves and phonons for example, rely on manipulating the superposition and entanglement properties already understood in quantum mechanics to perform quantum-type parallel calculations that, for certain problems, can be exponentially faster than on classical computers. Four basic types of circuit technologies are currently being evaluated and developed worldwide.

Fourth, if the development process succeeds in building a computer with 100-1000 quantum bits, then some tough problems, such as factoring large numbers, can be solved very quickly. This capability would make the currently used public key encryption technology obsolete.

Fifth, even though the code breaking capability is what catches a lot of attention, the most significant use of quantum computers will be in other areas such as protein folding problems, new material designs based on quantum mechanical properties and faster artificial intelligence.

Wow, I'm tired just thinking about it. But, hey, let's consider one more astronomical lesson learned and look at one screenshot of the data. As you recall, I've been struggling with how to make accurate photometric and astrometric measurements, like measuring the visible magnitudes of stars. My measurements have been close to the star catalog values, but still not as accurate as I'm led to believe is possible for amateurs. For instance, my measurements of magnitude 5-7 stars, just using my camera without a telescope, were within ±0.1 magnitude of the catalog values, compared to the ±0.01 magnitude goal.

My lessons learned so far included accounting for digitization noise, saturation of pixels, pixel sensitivity and extracting the right magnitude data from the star catalogs. My lesson this morning involved pinning down the extent to which "vignetting" due to camera optics and design was affecting the amount of light collected from the star. If the amount of light collected varies according to where the star image is located in the camera sensor plane, then this will be a big source of error. Yes, vignetting, where the center of the image is much brighter than the edges, is a big problem. This problem might not be noticed if you are just taking a picture of a person or a landscape, but for making photometry measurements it is a big deal.
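To get a feel for how much this matters, here is a minimal Python sketch that converts a fractional loss of collected light into a magnitude error, using the standard relation that a magnitude difference equals -2.5 times the base-10 log of the flux ratio. The function name and the sample loss percentages are just illustrative assumptions, not measurements from my camera.

```python
import math


def magnitude_error(relative_flux: float) -> float:
    """Magnitude offset for a star whose collected light is scaled by relative_flux.

    relative_flux = 1.0 means no loss; 0.9 means 10% of the light is lost.
    A positive result means the star is measured as fainter than it really is.
    """
    return -2.5 * math.log10(relative_flux)


# Hypothetical light losses between the image center and the edge
for loss_percent in (1, 5, 10, 20):
    relative_flux = 1.0 - loss_percent / 100.0
    error = magnitude_error(relative_flux)
    print(f"{loss_percent:2d}% light loss -> {error:.3f} magnitude too faint")
```

The takeaway is that a light loss of only about 1% already uses up the whole ±0.01 magnitude budget, while a loss of about 10% is enough to explain an error on the order of 0.1 magnitude.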

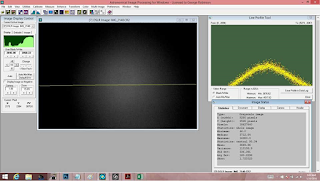

So, the screenshot below shows my flat image, taken this morning by just pointing the camera at the bright morning sky.

Lens vignetting and correction shows up in this AIP4WIN screenshot

The sky is mostly considered to be flat with the same brightness over wide areas. When I looked at the camera image, it looked very uniform, but when I turned up the contrast as shown in the image, then the typical vignetting with bright center and dim edges became apparent.

The yellow line in the first section shows the profile line over which the software measured the brightness, and you can see on the right-hand side of the screenshot how the brightness varies across the image. This is not good if you are trying to compare two star magnitudes, one from, say, the center of the image and the other from near one of the edges. Luckily the AIP4WIN software can use this flat image to correct the actual image taken separately of the star field. Whew, this means the ±0.01 magnitude goal might be achievable.
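For the curious, the general idea behind a flat correction can be sketched in a few lines of Python. This is only my rough illustration of the textbook approach (divide the star image by the flat image, normalized to its average), not a description of what AIP4WIN actually does internally. The tiny 3x3 "images", the function name and the brightness values are all made up, and the sketch assumes no flat pixel is zero and skips dark frame subtraction.

```python
def flat_field_correct(image, flat):
    """Divide each image pixel by the flat, normalized to the flat's mean.

    Both arguments are lists of rows with identical dimensions.
    Assumes every flat pixel is non-zero.
    """
    flat_pixels = [value for row in flat for value in row]
    flat_mean = sum(flat_pixels) / len(flat_pixels)
    corrected = []
    for image_row, flat_row in zip(image, flat):
        corrected.append(
            [pixel * flat_mean / flat_value
             for pixel, flat_value in zip(image_row, flat_row)]
        )
    return corrected


# Hypothetical flat with a bright center and dimmer edges (vignetting)
flat = [
    [80.0, 90.0, 80.0],
    [90.0, 100.0, 90.0],
    [80.0, 90.0, 80.0],
]

# A scene that is truly uniform, as recorded through the same vignetting
true_brightness = 50.0
image = [[true_brightness * f / 100.0 for f in row] for row in flat]

corrected = flat_field_correct(image, flat)
for row in corrected:
    print([round(value, 2) for value in row])
```

After the correction every pixel in this toy example comes out with the same value, which is the point: a star would then give the same reading whether it landed in the center or near an edge.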

Now all I have to do next time is try out the software and see if this vignetting is close to being the last source of measurement error that needs to be accounted for. So for now it's back to the computer to process the data images taken earlier. I'm certainly appreciating some of OCA secretary and author Bob Buchheim's comments and support, especially his book "The Sky is your Laboratory". He describes various experiments that amateurs can make and identifies about how many hours are required for planning, for taking the images and for doing the post processing. That's me now, doing the post processing. Thank you, Bob.

Anyway, until next time, all of you physicist wannabes might enjoy a YouTube video sent in by Math Whiz Dave, which presents a parody where Hitler is rejected from various physics graduate studies programs. The video apparently uses an original movie with subtitles substituted to make fun of the process of gaining entry into advanced physics studies programs. Thanks Dave.

Until next time,

If you are interested in things astronomical or in astrophysics and cosmology

Check out this blog at www.palmiaobservatory.com
