Well, yesterday I attended the local Association for Computing Machinery (ACM) meeting in Irvine, CA, where the presenter, Dr. Justin Dressel of Chapman University, spoke to 150 attendees about “Quantum Computing – State of Play.” This was an excellent presentation, and since I have been trying to “study” how quantum mechanics is used to do computing for a couple of years now, I wanted to summarize some of the takeaway points from my “student” perspective.

If you want to delve more into the computational nature of this topic, you can check out at least two textbooks, both of which I use: “Quantum Computing – A Gentle Introduction” by Rieffel and Polak, and “Quantum Information” by Stephen Barnett.

Dr. Justin Dressel, Chapman U., speaks on Quantum Computing at ACM meeting (Source: Daniel Whelen)

Dr. Dressel went through an introduction to quantum mechanics, enough at least to show how the quantum bit, relying on superposition between two states |1> and |0>, can be used to store much more information than can be done with just a classical bit made up of either 1 or 0.

He talked about the nature of quantum logic gates and how, by their very nature, they are reversible, unlike a typical classical gate such as a NAND gate, which is not reversible. The quantum bits (Qbits) can be evolved by circuits that perform a unitary operation, just like in the mathematical description outlined in quantum mechanics. So the Qbit can be associated with an infinite amount of information, represented by a unit vector pointing somewhere on the unit Bloch sphere, but once a measurement is made, the information available then is just a 1 or a 0.
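To make the Bloch sphere picture a little more concrete for myself, here is a minimal sketch in plain Python. It assumes the usual textbook parametrization cos(theta/2)|0> + e^(i phi) sin(theta/2)|1>; the function names are my own and not anything from the talk. It shows that the two angles can take any values, yet a measurement only ever returns a 0 or a 1, with probabilities set by the squared amplitudes.

```python
import cmath
import math
import random


def bloch_state(theta, phi):
    """Amplitudes (a0, a1) of cos(theta/2)|0> + e^{i*phi} sin(theta/2)|1>."""
    a0 = complex(math.cos(theta / 2))
    a1 = cmath.exp(1j * phi) * math.sin(theta / 2)
    return (a0, a1)


def measurement_probabilities(state):
    """Born rule: the probability of each outcome is the squared magnitude."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


def measure(state, rng=random):
    """Simulate one measurement; only a single classical bit comes out."""
    p0, _ = measurement_probabilities(state)
    return 0 if rng.random() < p0 else 1


# Pick an arbitrary point on the Bloch sphere.
state = bloch_state(math.pi / 3, math.pi / 4)
p0, p1 = measurement_probabilities(state)
print(f"P(0) = {p0:.3f}, P(1) = {p1:.3f}")  # P(0) = 0.750, P(1) = 0.250
print("one measurement gives:", measure(state))
```

Notice that the angle phi does not show up in the probabilities at all, which is one way of seeing how much of the state is hidden from a single measurement.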

He did not talk much about the measurement problem other than to say that the original quantum superposition state is lost and cannot be recovered. I started wondering why this is not another instance of “information loss” and time asymmetry. The measurement problem then essentially means that if you try to use time symmetry after a measurement, you will not recover the original state vector, but apparently you will recover the same statistics? Isn’t this still a situation where information is lost and cannot be recovered? Are there any experts who can help work through this question?

The speaker did not address the issue of initializing the Qbits, but we know that one can apply unitary operations to rotate a given initial state, 1 or 0, into any arbitrarily chosen position on the Bloch sphere, so you can set up whatever superposition you want in the initial state vector.
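Here is a small sketch of that initialization idea, again in plain Python. I am assuming the standard rotation about the Y axis of the Bloch sphere, usually written Ry(theta), and the helper names are mine. It only handles real amplitudes, so it reaches one great circle of the sphere; a second rotation about the Z axis would be needed to add a phase. It also shows the reversibility point: applying the inverse rotation brings back the starting state exactly.

```python
import math


def ry(theta):
    """2x2 matrix for a rotation by angle theta about the Bloch sphere Y axis."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s],
            [s, c]]


def apply(gate, state):
    """Multiply a 2x2 gate matrix into a 2-component state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]


zero = [1.0, 0.0]                 # the initial state |0>
theta = 2 * math.pi / 3           # an arbitrary choice of rotation angle

prepared = apply(ry(theta), zero)        # amplitudes cos(pi/3), sin(pi/3)
restored = apply(ry(-theta), prepared)   # the inverse rotation undoes the gate

print("prepared:", prepared)
print("restored:", restored)
```

Because Ry(-theta) is the inverse of Ry(theta), no information is lost by the gate itself. Only a measurement throws the superposition away.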

He talked about some quantum algorithms like Shor’s algorithm, which uses the quantum Fourier transform. For n Qbits the quantum version needs a number of gates that grows only polynomially with n, whereas a classical FFT over the same 2^n amplitudes takes time that is exponential in n. The algorithm then uses the transform as a shortcut for factoring large numbers that are the product of two large primes, which gets everyone excited because it would be a way to crack the common RSA encryption algorithms. But, not to worry, as I will shortly go over the speaker’s assessment of how likely that is going to be.
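For anyone wondering how a Fourier transform turns into factoring, here is a toy sketch of the classical half of Shor's algorithm. Factoring N is reduced to finding the period r of a^x mod N, and only that period finding step is done on the quantum computer. In this sketch the period is found by brute force, which is exactly the part that becomes hopeless for large N; the function names and the tiny example N = 15 are my own choices for illustration.

```python
from math import gcd


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force stand-in for the quantum period finding step.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(a, n):
    """Try to split n using the period of a modulo n; None if a is unlucky."""
    common = gcd(a, n)
    if common != 1:
        return (common, n // common)   # lucky guess, a already shares a factor
    r = find_period(a, n)
    if r % 2 == 1:
        return None                    # odd period, pick another a
    half = pow(a, r // 2, n)           # a**(r/2) mod n
    if half == n - 1:
        return None                    # trivial square root, pick another a
    return (gcd(half - 1, n), gcd(half + 1, n))


print(find_period(7, 15))          # 4
print(factor_from_period(7, 15))   # (3, 5)
```

Some choices of a do not work (for example a = 14 with N = 15), in which case you just try another one.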

But before that, he went into some of the quantum

technologies used to implement quantum computing gates and systems right now. He described the two technologies used

currently: (1) Trapped Ions and (2) Superconducting circuits.

The trapped ion approach, headed up by the University of Maryland, has achieved an arrangement of 53 Qbits. Each Qbit is represented by the state of a Ytterbium atom, with 1’s and 0’s represented by hyperfine spin transition states, which can be monitored by the slightly different microwave resonance frequency associated with each state. The atoms are maintained in a trapped state by magnetic fields and laser beams, somewhat similar to what we have seen in laser cooling applications. In addition, the atoms can be manipulated and moved around in order to interact and become entangled with other atoms. He did not go into how the quantum entanglement would be used in quantum computing.

The second approach is superconducting circuits operating at

milli-Kelvin temperatures. These circuits

seem to have a speed advantage over the trapped ion approach and can be

manipulated by electric signals and microwaves and do not need to physically

move atoms.

There are many contenders working with superconductors, including Google, IBM, Rigetti and UC Berkeley. Google is currently the leader with 72 Qbits in its Bristlecone quantum computer chip. Just because it is a chip, don’t get excited. Just look at all the control circuitry and the multilevel cooling shelves needed, and the fact that the whole assembly is stored in an evacuated dilution refrigerator.

Google Bristlecone Quantum Computer Chip Assembly (Source: Google)

He mentioned that a third type of quantum computer, using just room temperature superconductors, is being considered, but a lot more research is still needed. Something like 5-10 years will be needed to get to the point of knowing how this other technology will play out.

Ok, if you want to run some of your own programs on a small quantum computer, and you don't want an entire dilution refrigerator taking up the space of one of your cars in the garage, you can do it now by trying out the IBM Quantum Experience at: https://www.research.ibm.com/ibm-q/

If you want some more discussion of how superconducting quantum bits work, check out this Wikipedia article: https://en.wikipedia.org/wiki/Superconducting_quantum_computing

Ok, so there is still a lot of work ahead, even as the number of Qbits achievable keeps going up year after year. But what about other practical problems? He mentioned noise as the number one problem. The noise comes from environmental factors: the operating environment is not a total vacuum and is still not quite close enough to absolute zero. The noise destroys the quantum superposition, almost as if the noise makes a measurement of the quantum state. To get around this problem, error correction is used to bring the error rate down from about 0.001 to about 10^-20, which is needed for many applications, such as cracking RSA encryption.

His encryption example started with a higher-level key consisting of 2048 bits. Quantum theory says you would need twice that number of Qbits to begin, but after adding quantum error-correcting and parity Qbits, the number rises quite quickly, and he says about 10,000 Qbits are needed for every logical Qbit originally called for. Other factors increase the total Qbit count for breaking RSA encryption to about 10^9 Qbits. With that number of Qbits, the quantum computer has been estimated to take just 27 hours to break one RSA encryption example. A classical computer, using the best available factoring routines, is expected to take something like 6.4 quadrillion years. (He listed the Phys. Rev. A article which describes all of this but I didn’t copy it down quickly enough. Sorry.)
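Just to check that these numbers hang together, here is the back-of-the-envelope arithmetic in Python. All the inputs are the figures quoted in the talk as I noted them down. The leftover multiplier attributed to "other factors" is my own inference from dividing the quoted total by the error-corrected count, not a number the speaker gave, and the hours-per-year value is my approximation.

```python
key_bits = 2048                    # RSA key size in the speaker's example
logical_qbits = 2 * key_bits       # "twice that number of Qbits to begin"
physical_per_logical = 10_000      # error correction overhead per logical Qbit

with_correction = logical_qbits * physical_per_logical
quoted_total = 10 ** 9             # total Qbit count quoted in the talk

# Inferred, not stated in the talk: what the "other factors" must contribute.
remaining_factor = quoted_total / with_correction

print("logical Qbits:", logical_qbits)                  # 4096
print("with error correction:", with_correction)        # 40960000
print("implied extra factor:", round(remaining_factor, 1))

# Rough ratio of the quoted classical and quantum run times.
hours_per_year = 365.25 * 24       # my approximation
classical_hours = 6.4e15 * hours_per_year
quantum_hours = 27
print(f"quoted speedup: about {classical_hours / quantum_hours:.1e} times")
```

So the error correction alone gets you to about 40 million Qbits, and the rest of the way to a billion has to come from those other factors.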

He guessed that within 10-15 years the technology would have

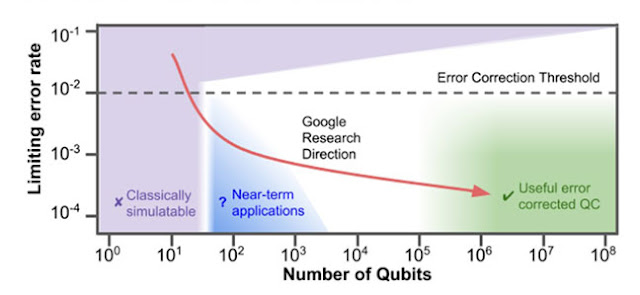

progressed enough to amass this number of Qbits. But what to do until that happens? And why is Google spending a lot of research

money in this field, give that their problem is to search through terabytes and

petabytes of data?

He said that one of the very first applications, using just a limited number of Qbits, will be the simulation of small quantum mechanical systems and molecules. You need about a hundred Qbits for this. The crossover is almost here: the quantum computers possible today are just about getting to be faster than the fastest simulation of quantum systems by classical computers. That is neat, just use a quantum computer to do quantum mechanics. He also answered the question I had about Google’s investment. He said that Google hopes to use quantum computers as teaching tools to help train much larger machine learning systems running on classical computers.

One indication of Google quantum computing research direction (Source: Google)

Finally, in response to a question from the group, he said he had not included anything about the status of D-Wave quantum computing. I didn't quite get the answer, but it was something to the effect that there is some controversy surrounding their announcements about quantum annealing performance. For those who are curious, quantum annealing is a method that uses quantum mechanics to search for the minimum value of some objective function, much as annealing in materials science lets a material settle into a low-energy state.

So that is my summary of the ACM meeting. It was nice to see some faces I recognized: Frank, Larry, Sam, Brian and Dr. Don. Sorry the rest of you couldn't make it. The ACM chapter served good treats and I was able to treat myself to a white chocolate macadamia nut cookie. Yumm! Maybe I'll still be able to see some of you at the UCI Physics Colloquium and the CRISPR gene editing talk, but for now I have to get back to packing my bags for the trip to visit the VLA.

Until next time,

Resident Astronomer George

There are over 200 postings of similar topics on this blog

If you are interested in things astronomical or in astrophysics and cosmology

Check out this blog at www.palmiaobservatory.com
